Leadership and capacity-building programmes are very good at changing people. The hard part is proving it.

A typical leadership programme might run for nine months to a year, and over that time participants go through an intensive journey: coaching, peer learning and practical training in new frameworks. The outcomes of that journey are usually well defined in terms of the leadership competencies participants develop. Measuring everything that happens afterwards is where things get trickier.

One, two, three years on, how do you trace a line from those inner shifts to what happens in organisations and communities? And how do you do that in a way that’s both rigorous and simple enough for a global team to actually use?

This article is about that gap and some practical ways to close it.

From 'stronger leaders' to specific, observable change

Leadership programmes often describe their impact in phrases like:

- Stronger leadership

- Increased capacity to create social impact

- Greater ability to achieve organisational goals

Those statements are useful at the level of vision, but they’re too broad to measure.

A more practical starting point is to go back to the anecdotes:

- What do leaders actually say has changed for them?

- What do programme staff see in their behaviour during and after the programme?

- What do their organisations or communities notice?

From those stories, you can usually surface observable changes that are far more concrete, for example:

- “I make difficult decisions faster because I have a clearer process.”

- “When I feel stuck, I now have three people I can call who will challenge my thinking.”

- “I’m more likely to initiate brave conversations with partners or funders.”

- “I spend more time listening to my team and less time rushing to solutions.”

Those become the raw materials for your indicators.

Why “How good a leader are you?” is the wrong question

One of the traps leadership programmes fall into is asking people to rate themselves in very general terms:

“On a scale of 1–10, how effective a leader are you?”

The problem is that self-perception is volatile. At the beginning of a programme, many participants will confidently choose 7 or 8 out of 10. A few months in, once they’ve encountered new frameworks and honest feedback, they may revise that right down.

On paper, it looks like the programme has reduced their leadership.

In reality, they’ve just become more self-aware.

A better approach is to focus on things that are observable in practice, for example:

- “When you have a difficult decision to make, to what extent do you have a trusted support network you can turn to?”

- “In the last three months, how often have you initiated a difficult conversation that you would previously have avoided?”

- “To what extent do you feel able to act when you see an injustice affecting the community you serve?”

These are still subjective ratings, but they’re anchored in actions and situations, not in abstract self-perception. That makes them more stable over time and more useful for programme improvement.

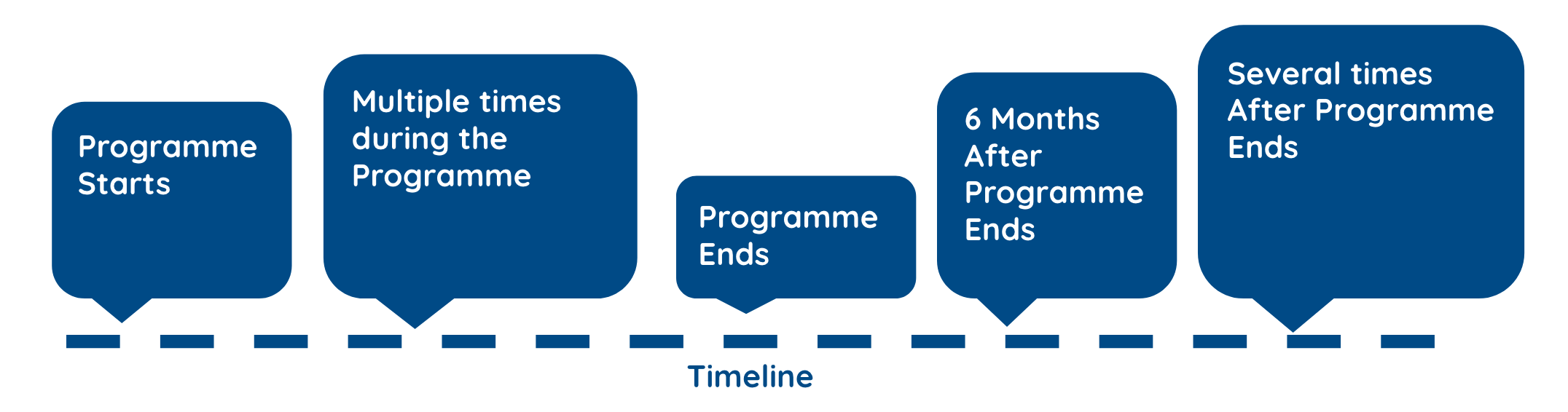

Designing a simple before–during–after journey

Measuring “distance travelled” doesn’t have to be complicated.

For a leadership or capacity-building programme, a practical pattern might look like:

- Baseline at shortlisting or induction

  - Take advantage of the goodwill and engagement at this stage.

  - Ask 5–10 carefully chosen questions that reflect the key outcomes you’re aiming for.

- Check-in during the programme

  - Repeat the same core questions once or twice during the programme.

  - Use the results not just for reporting, but to adjust facilitation and support.

- Post-programme at graduation

  - Ask the same questions again, plus a small number about immediate changes in practice.

- Alumni follow-up

  - Repeat the short survey annually for alumni and, less frequently, for shortlisted-but-unsuccessful applicants.

  - Over time, this shows how long changes are sustained and gives you a light-touch comparison group.

The key is consistency: the same person, answering the same question, at different points in time. That’s what allows you to see genuine movement rather than one-off snapshots.
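To make “distance travelled” concrete, here is a minimal Python sketch, assuming survey responses are stored as (person, question, wave, score) rows on a 1–10 scale. The wave labels, question id and function name are hypothetical. Each person is compared only with their own baseline on the same question, and every average is reported alongside the number of people it rests on, which matters when cohorts are small.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical wave labels for the before–during–after journey.
WAVES = ["baseline", "check_in", "graduation", "alumni_year_1"]


def distance_travelled(responses):
    """Return {(question, wave): (mean change from baseline, n people)}.

    Each change is within-person: a later score minus the same person's
    baseline score on the same question. People with no baseline are skipped.
    """
    # scores[(person, question)][wave] -> score
    scores = defaultdict(dict)
    for person, question, wave, score in responses:
        scores[(person, question)][wave] = score

    changes = defaultdict(list)  # (question, wave) -> list of changes
    for (person, question), by_wave in scores.items():
        if "baseline" not in by_wave:
            continue  # no starting point, so no distance to measure
        for wave in WAVES[1:]:
            if wave in by_wave:
                changes[(question, wave)].append(by_wave[wave] - by_wave["baseline"])

    return {key: (round(fmean(vals), 2), len(vals)) for key, vals in changes.items()}


# Hypothetical responses to one action-anchored question.
responses = [
    ("p1", "support_network", "baseline", 4),
    ("p1", "support_network", "graduation", 7),
    ("p2", "support_network", "baseline", 5),
    ("p2", "support_network", "graduation", 6),
    ("p3", "support_network", "graduation", 9),  # no baseline: excluded
]
print(distance_travelled(responses))
# {('support_network', 'graduation'): (2.0, 2)}
```

Excluding people without a baseline is a deliberate choice: it keeps every number a genuine before-and-after comparison rather than a mix of different people at different points in time.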

From outcomes to impact: three lenses for the long term

Let's take three broad types of longer-term impact that might emerge from a leadership programme:

- Scaling organisational impact

  - Are leaders better able to grow or stabilise their organisations?

  - Are they building stronger partnerships, raising more funding or increasing reach?

- Amplifying leadership in others

  - How many people are leaders now mentoring, coaching or training with what they’ve learned?

  - Are they sharing frameworks and tools within their teams, networks or communities?

- Shifting systems and narratives

  - Are leaders better equipped to advocate, influence policy or change how issues are framed?

  - Are they playing visible roles in coalitions or movements?

These are not metrics you can fully capture with a simple tick-box survey. But they can be explored systematically.

A good route is:

- Qualitative first:

  - Conduct a small set of semi-structured interviews with alumni from different years and contexts.

  - Focus on stories of change: what’s different in their work, their organisations, and their communities?

- Cluster themes:

  - Code the transcripts and look for recurring patterns under those three lenses (scaling, amplification, systems).

  - Identify 6–10 common types of change that appear again and again.

- Then design indicators:

  - For some themes, design survey questions and scales.

  - For others, you might track counts (e.g. people mentored, policies influenced) or use structured qualitative prompts.
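Once transcripts have been coded by hand, the “cluster themes” step is largely a matter of counting. The Python sketch below assumes each interview has been tagged with a set of (lens, theme) codes; the interview ids, theme labels and the `min_interviews` threshold are all hypothetical. It counts how many interviews mention each theme rather than how many times it is mentioned, so one talkative interviewee cannot inflate a theme.

```python
from collections import Counter

# Hypothetical coded interviews: each alumni interview maps to the set of
# (lens, theme) codes a reviewer applied to its transcript.
coded_interviews = {
    "alum_2019_a": {("scaling", "new funding"), ("amplification", "mentoring staff")},
    "alum_2020_b": {("amplification", "mentoring staff"), ("systems", "policy input")},
    "alum_2021_c": {("scaling", "new funding"), ("amplification", "mentoring staff")},
    "alum_2022_d": {("systems", "coalition role")},
}


def recurring_themes(interviews, min_interviews=2):
    """Return (lens, theme, n) for themes found in at least `min_interviews`
    interviews, most common first. Codes are sets, so each theme counts at
    most once per interview."""
    counts = Counter(code for codes in interviews.values() for code in codes)
    return [(lens, theme, n) for (lens, theme), n in counts.most_common()
            if n >= min_interviews]


print(recurring_themes(coded_interviews))
# [('amplification', 'mentoring staff', 3), ('scaling', 'new funding', 2)]
```

With a real set of interviews you would tune the threshold until roughly 6–10 themes remain; the counting itself supports judgement about which themes matter rather than replacing it.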

You don’t need to get it “perfect” on day one. The point is to ground your long-term impact story in real experiences, then build measurement on top of that.

Using comparison groups you already have

Many programmes already have a built-in comparison group: people who applied, were shortlisted, but weren't selected to join the programme.

When handled with care and respect, this group can help you see:

- Which changes are part of the general trajectory of committed social leaders, and

- Which changes seem to be associated with participation in your programme.

That isn’t a randomised controlled trial, and it doesn’t need to be. A short, periodic survey of shortlisted-but-unsuccessful applicants gives you a helpful counterfactual, adding nuance and credibility to your impact story without creating a whole new research project.
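As an illustration of how the comparison might be summarised, here is a minimal Python sketch, assuming you hold (baseline, follow-up) scores on the same question for both groups. It takes the difference between the average change in participants and the average change in the comparison group, which is the basic idea behind a difference-in-differences estimate. The function names and scores are hypothetical, and the result is an association, not a causal effect: the two groups were not randomly assigned and may differ in ways a short survey cannot see.

```python
from statistics import fmean


def mean_change(pairs):
    """Average of (follow_up - baseline) across people with both scores."""
    return fmean(after - before for before, after in pairs)


def compare_groups(participants, comparison):
    """Contrast average change in participants with average change in
    shortlisted-but-unsuccessful applicants on the same question."""
    p = mean_change(participants)
    c = mean_change(comparison)
    return {
        "participants": round(p, 2),
        "comparison": round(c, 2),
        "difference": round(p - c, 2),  # change beyond the general trajectory
    }


# Hypothetical (baseline, one-year follow-up) scores on one question.
participants = [(4, 7), (5, 7), (6, 7)]
comparison = [(4, 5), (5, 5), (6, 8)]
print(compare_groups(participants, comparison))
# {'participants': 2.0, 'comparison': 1.0, 'difference': 1.0}
```

Here both groups improved, so only the extra point of change is a candidate for attribution to the programme, which is exactly the distinction between the general trajectory and programme-associated change described above.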

Start with one focused experiment

If you’re running a leadership programme that’s been in place for several years and/or operates in several locations, it can be overwhelming to think about retrofitting an impact framework. But you don’t have to do it all at once. Instead, you can start with something small and manageable, for example:

- Pick one country or region.

- Choose one or two cohorts (recent or past).

- Interview a handful of alumni about long-term impact.

- Turn what you learn into a short pilot survey.

- Test it with that cohort and see what you get back.

If the experiment produces insight and energy, it becomes much easier to make the case for a wider, more coordinated approach.

Where Makerble fits

Makerble was originally built to help organisations move beyond anecdotes and spreadsheets while keeping their work rooted in human stories.

For leadership programmes, that means:

- Turning your existing competencies and anecdotes into clear, trackable indicators

- Automating before–during–after surveys so you can see distance travelled over time

- Bringing qualitative and quantitative evidence together in one place

- Making it easy to compare cohorts, regions or themes without duplicating data

If you’re wrestling with questions like “How do we show what lasts?” or “How do we capture impact beyond the nine months?”, we’d be happy to explore this with you – whether that’s helping design the framework, setting up the system, or both. Email matt.kepple@makerble.com to find out more.
